A(P)I OS: The Future of Software in an AI-First World

How AI will transform apps, interfaces and the way we work

Last time I wrote an extensive piece about how current LLMs will never be generally intelligent and what limitations exist in their current architecture that prevent real human creativity. In this article, I want to talk about the long-term opportunities for AIs and LLMs and the capabilities we might see.

AIs will eat current UX through natural interfaces

A major shift on the horizon is that AIs are poised to revolutionize current user experience (UX) paradigms through the adoption of natural interfaces. This marks a departure from the conventional reliance on screens as the primary mode of interaction, leading to a new era where voice interactions, gestures, and other intuitive inputs become the norm.

For example, OpenAI's advanced voice mode for ChatGPT demonstrates a conversational experience that closely mimics natural dialogue. Moreover, image-generation models like Flux and video AIs such as Hailuo or Kling exemplify the capacity of AI to process content and interact with humans in innovative ways.

Currently, these AI capabilities are rather segregated into different applications, but they are poised to become integrated at some point, blending into each other and making human-AI interaction a truly immersive experience. With the advancement of AI capabilities, users will interact with their devices in a more seamless, human-like manner, reducing friction and enhancing natural accessibility.

AI inference will become a commodity

A second significant trend is AI inference becoming a commodity, akin to the transformative influence of Moore’s Law in the realm of microprocessors. Similar to how we witnessed a rapid escalation in computing power and efficiency, AI is set to undergo an analogous journey.

The efficiency gains in AI training are part of a broader trend in the evolution of AI capabilities over the decades. The foundational architecture of AIs, which originated in the 1960s, laid the groundwork, but it wasn’t until the arrival of the transformer architecture in 2017 that training large models became feasible, a pivotal shift in AI development. OpenAI highlighted this progression in 2020 with the introduction of GPT-3, and ChatGPT, launched in late 2022, demonstrated the usefulness of large language models to a broad audience.

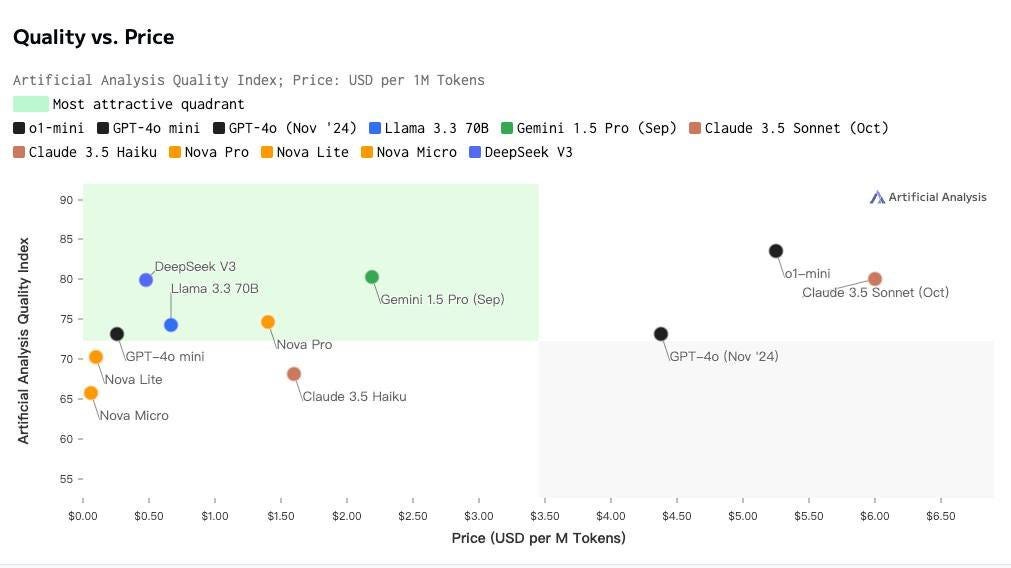

More recently, DeepSeek’s models have gained significant attention for revolutionizing the training efficiency of AI tools and large language models (LLMs). This is underscored by a notable decrease in the cost per token for the latest models, as illustrated in the accompanying chart, further emphasizing the trend toward making AI technologies better in quality and cheaper to access.

This commoditization is paving the way for widespread adoption and democratization of AI-powered tools. If we extrapolate this trend into the future, these AI technologies will become even more powerful and increasingly cheaper, to the point where AI can be deployed everywhere.

A(P)I OS

If we consider these two trends together (AIs transforming UX through natural interfaces, and AI being deployed everywhere), it is easy to imagine a future where people interact with any computing device through natural means. This transformation will see AI, VR, AR, and voice assistants converge into universal computing access points.

This will lead to the internet eventually becoming even more of a data layer than it already is. Even today, one can use AI-powered search engines to process vast amounts of content without visiting a single website.

The winning products of this new era will be those that expose data via an AI-friendly, well-structured API. These interfaces will allow AIs to easily access data and to act as a natural interface layer between the internet and its human users.
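To make "AI-friendly, well-structured API" a bit more concrete, here is a minimal sketch of what such an interface could look like. Everything in it is an assumption made for illustration: the product data, the `search_products` function, and the shape of the tool call are hypothetical and not taken from any particular vendor's API. The idea is simply that the service publishes a machine-readable description of what it offers, and an AI model responds with a structured request that the service can execute.

```python
import json

# Hypothetical in-memory data that a service might expose.
PRODUCTS = [
    {"id": 1, "name": "Trail Shoe", "price_eur": 89.0, "in_stock": True},
    {"id": 2, "name": "Road Shoe", "price_eur": 120.0, "in_stock": False},
    {"id": 3, "name": "Running Socks", "price_eur": 12.5, "in_stock": True},
]

# Machine-readable description of the endpoint, written in JSON Schema style.
# An AI model would read this to learn what it can ask for and how.
SEARCH_PRODUCTS_SPEC = {
    "name": "search_products",
    "description": "Find products by name, optionally capped by price.",
    "parameters": {
        "type": "object",
        "properties": {
            "query": {"type": "string"},
            "max_price_eur": {"type": "number"},
        },
        "required": ["query"],
    },
}


def search_products(query, max_price_eur=None):
    """Return products whose name contains the query (case-insensitive)."""
    needle = query.lower()
    hits = [p for p in PRODUCTS if needle in p["name"].lower()]
    if max_price_eur is not None:
        hits = [p for p in hits if p["price_eur"] <= max_price_eur]
    return hits


# Registry mapping tool names to the functions that implement them.
TOOLS = {"search_products": search_products}


def handle_tool_call(raw_call):
    """Execute a structured request (as a model might emit it) and return JSON."""
    call = json.loads(raw_call)
    func = TOOLS[call["name"]]
    return json.dumps(func(**call["arguments"]))


# A request as an AI model might produce it after reading the spec above.
result = handle_tool_call(
    '{"name": "search_products", '
    '"arguments": {"query": "shoe", "max_price_eur": 100}}'
)
print(result)
```

The human never sees this exchange. They ask for "running shoes under 100 euros", and the AI translates that into the structured call and turns the JSON answer back into natural language.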

Dismantling traditional software products

I mentioned earlier that the biggest shift we will see is the disappearance of screens as the primary means of interaction. So, for example, instead of opening Power BI to plot a chart, the LLM will render that chart for you. Instead of writing an SQL query, you will just ask for data. Instead of opening Photoshop for an edit, you will just ask an AI to make the change for you.

One can experience this today with the so-called vibe-coding movement. You can try this out yourself: Download an AI code editor like Cursor, or Visual Studio with its integrated Copilot feature. Then, install a voice-to-text app (I like Superwhisper on my Mac). Even without being able to write a single line of code, you can now just ask the code editor to write working personal software for you. It’s quite an unbelievable experience.

Now imagine this playing out for any software: No more manually updating a project plan, a customer account, or a medical record. Just ask the LLM to do it.

Any software product will be affected by this, as natural interactions will not just change how we interact with software; they will shift the encoded knowledge from the graphical interface into the natural interface, i.e., the LLM. The new paradigm will be: Need anything? Just ask for it.

Implications on Software

Software as we know it is about to undergo a fundamental shift as well. Traditionally, software development has been a rigid process—code is written, compiled, and deployed to serve a fixed set of functions. But with LLMs, software is becoming dynamic, adaptable, and even conversational. Instead of navigating complex interfaces or writing custom scripts, users will simply describe what they need, and AI will generate, modify, and execute code in real time.

This evolution turns software into a fluid, ever-changing entity—no longer constrained by pre-built menus and rigid workflows but shaped on demand by natural interactions. Need a custom data analysis tool? Just ask. Want an automation script tailored to your business? The AI will generate it.

At the same time, information retrieval will become nearly instantaneous. Instead of manually searching through files, emails, or databases, AI will access a vast, continuously updated knowledge layer. With vector databases indexing everything from personal notes to enterprise documentation, answers will be surfaced instantly, contextually, and in ways that feel like second nature. The days of digging through folders for a specific piece of information will be as outdated as dial-up internet.
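As a rough illustration of the retrieval idea, the sketch below ranks a few documents by similarity to a natural-language question. It is a toy under stated assumptions: the `embed` function uses plain word counts as a stand-in for a learned embedding model, the dictionary `INDEX` stands in for a real vector database, and the file paths and texts are made up for the example.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for a learned embedding model: a bag of word counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical documents; a real system would index notes, mail, wikis, etc.
DOCS = {
    "notes/q3-plan.txt": "project plan for the q3 product launch",
    "mail/invoice.txt": "invoice for the cloud hosting contract",
    "wiki/onboarding.txt": "onboarding guide for new engineers",
}

# The "vector database": one vector per document, computed once up front.
INDEX = {path: embed(text) for path, text in DOCS.items()}


def search(query, top_k=1):
    """Return the paths of the top_k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda p: cosine(q, INDEX[p]), reverse=True)
    return ranked[:top_k]


best = search("where is the hosting invoice")
print(best)
```

Real embeddings capture meaning rather than exact word overlap, so a production setup would match "bill for our servers" to the invoice as well, which this word-count version cannot do.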

Conclusion

If all of this sounds familiar, it’s because we’ve seen it before. In the early days of the internet, many ambitious ideas failed, not because they were wrong, but because the technology wasn’t ready. Companies tried to build online marketplaces, social platforms, and cloud-based applications long before broadband, mobile computing, and cloud infrastructure made them viable. Today, we are witnessing the same pattern with AI-driven hardware like the Humane AI Pin and Rabbit R1. These products struggle not because the vision is flawed, but because the underlying ecosystem and technology aren’t mature enough yet.

But history suggests that the technology will catch up, and when it does, it will transform how we interact with computers in ways we can barely imagine today, hand in hand with new, innovative business models. Just as computing moved from distant mainframes to personal desktops, then from desktops to smartphones we carry everywhere, the next step brings it even closer—to AI-powered interfaces that sit on us, live in our ears, and overlay our world with intelligent, real-time assistance.

This shift is not just about convenience—it’s about making technology disappear into the background, freeing us from rigid interfaces and making interaction as natural as a conversation. The AI-first paradigm will fundamentally reshape software, turning applications into invisible, omnipresent services that we summon on demand. And as we move into this future, the winners will not be those who cling to screens, but those who embrace AI as the new interface layer between humans and the digital world.